Great for seamless patterns, abstract drawings, and watercolor-styled images. How do you use it and train a neural network on your own pictures?

Download the model here: https://huggingface.co/netsvetaev/netsvetaev-free

AI, ANN and other forms of artificial intelligence

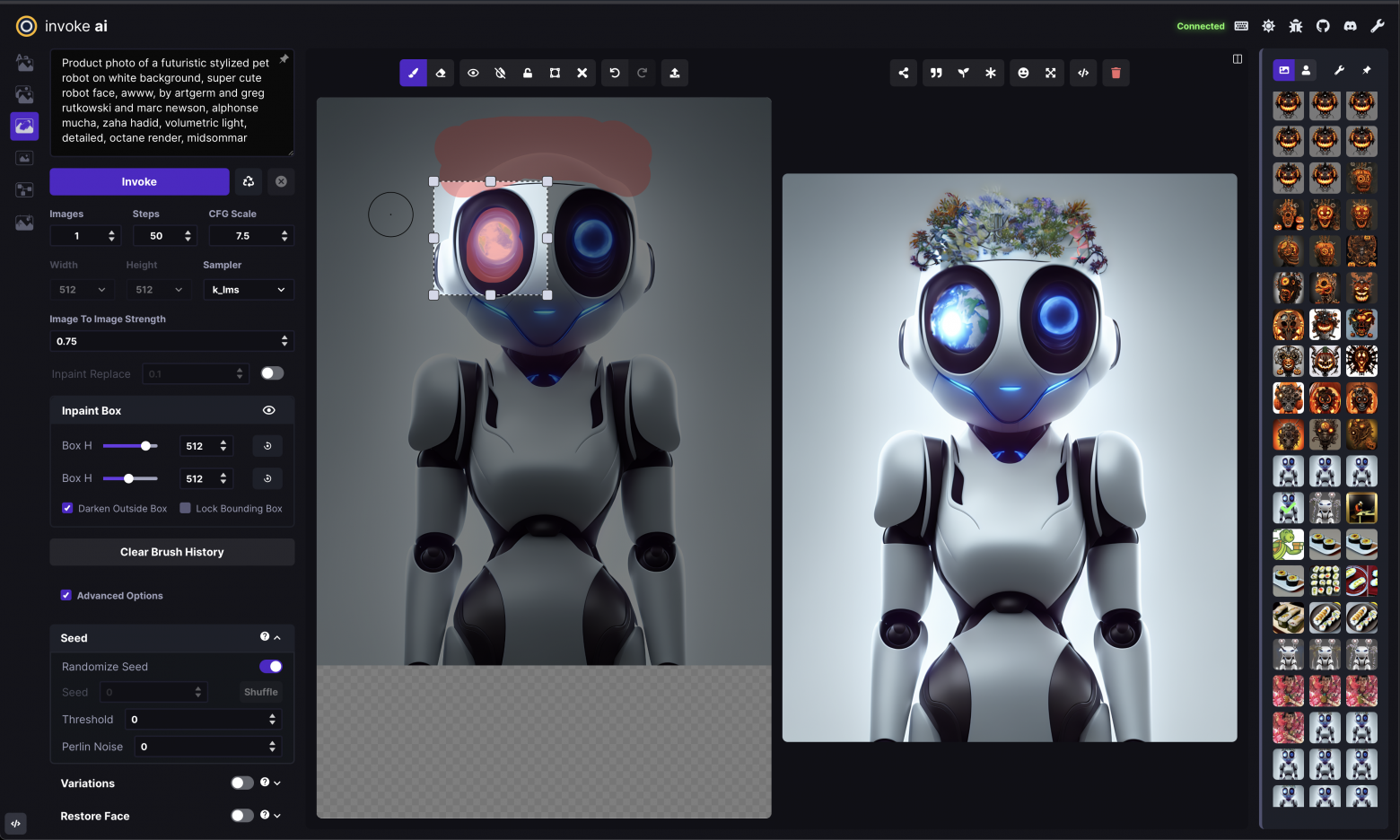

The InvokeAI team is excited to share our latest feature release, with a set of new features, UI enhancements, and CLI capabilities.

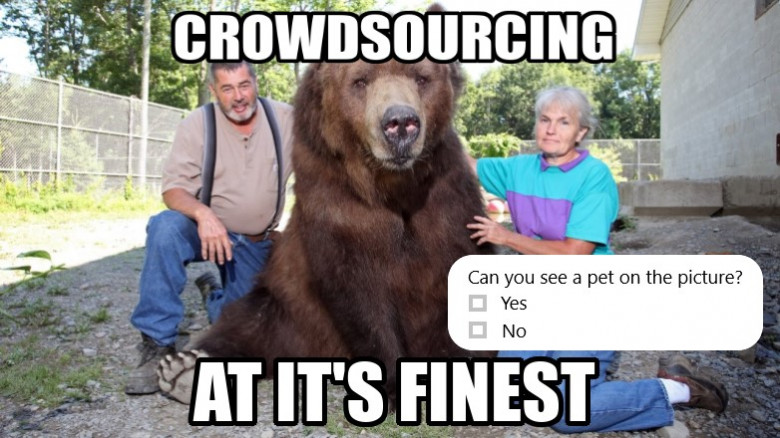

Toloka is a crowdsourcing platform and microtasking project launched by Yandex to quickly mark up large amounts of data. But how can such a simple concept play a crucial role in improving the work of neural networks?

FL_PyTorch: Optimization Research Simulator for Federated Learning is publicly available on GitHub.

FL_PyTorch is a suite of open-source software written in Python that builds on top of PyTorch, one of the most popular research Deep Learning (DL) frameworks. We built FL_PyTorch as a research simulator for FL to enable fast development, prototyping, and experimenting with new and existing FL optimization algorithms. Our system supports abstractions that provide researchers with sufficient flexibility to experiment with existing and novel approaches to advance the state of the art. The work appears in the proceedings of the 2nd International Workshop on Distributed Machine Learning (DistributedML 2021). The paper, presentation, and appendix are available in the DistributedML’21 Proceedings (https://dl.acm.org/doi/abs/10.1145/3488659.3493775).

The project is distributed as open source under the Apache License, Version 2.0. Code Repository: https://github.com/burlachenkok/flpytorch.
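To make the idea of an FL optimization algorithm concrete, here is a minimal pure-Python sketch of federated averaging on a toy one-parameter least-squares problem. It does not use FL_PyTorch and does not reflect its API; the function names `local_step`, `federated_average`, and `run_rounds` and the toy client data are assumptions made only for illustration.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float]  # (x, y) pair held privately by one client


def local_step(weights: Sequence[float], data: Sequence[Sample], lr: float) -> List[float]:
    """One gradient-descent step on a client's data for the model y ~ w * x."""
    w = weights[0]
    grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
    return [w - lr * grad]


def federated_average(client_weights: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Average client models, weighting each by its number of samples."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


def run_rounds(clients: Sequence[Sequence[Sample]], rounds: int = 50, lr: float = 0.05) -> List[float]:
    """Simulate communication rounds: broadcast, local update, aggregate."""
    global_w = [0.0]
    for _ in range(rounds):
        updates = [local_step(global_w, data, lr) for data in clients]
        global_w = federated_average(updates, [len(d) for d in clients])
    return global_w


# Hypothetical toy data: every client observes points from y = 2 * x.
clients = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
]
final_w = run_rounds(clients)
```

Only model parameters travel between the clients and the aggregator in this sketch; the raw samples never leave the client that holds them.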

To become familiar with that tool, I recommend the following sequence of steps:

Alexander Volchek, IT entrepreneur, CEO of the educational platform GeekBrains

Pretty much everyone in the IT community is talking about metaverses, NFTs, blockchain and cryptocurrency. This time we will discuss metaverses, and come back to everything else in the letters to follow. Entrepreneurs and founders of tech giants are passionate about this idea, and investors are allocating millions of dollars to projects dealing with metaverses. Let's start with the basics.

Most people fear artificial intelligence (AI) because of the unpredictability of its possible actions and impact [1], [2]. Concerns about this technology are also voiced by AI experts themselves: scientists and engineers, among them the leading figures of their professions [3], [4], [5]. And you possibly share these concerns, because it's like leaving a child alone at home with a loaded gun on the table: in 2021, AI was first used on the battlefield in a completely autonomous way, with independent determination of a target and a decision to defeat it without operator participation [6]. But let’s be honest: since humanity has taken in the opportunities this new tool could give us, there is already no way back. This is how the law of gengle works [7].

Imagine the feeling of a caveman observing our modern routine world: electricity, the Internet, smartphones, robots, and so on. In the next two hundred years, thanks in large part to AI, humankind will undergo as many transformations as it has since the moment we learned to control fire [8]. The effect of this technology will surpass all our previous changes as a civilization. And even as a species, because our destiny is not to create AI, but to literally become it.

The first text-based CAPTCHA (we’ll call it just CAPTCHA for the sake of brevity) was used in 1997 by the AltaVista search engine. It prevented bots from adding Uniform Resource Locators (URLs) to its web search engine.

Back then it was a decent defense measure. However, progress can't be stopped, and this defense was bypassed using the OCR available at the time (for example, FineReader).

CAPTCHAs became more complex: noise and distortions were added so that the popular OCRs couldn’t recognize the text. Then OCRs custom-made for this task appeared, which cost the attacking side extra money and knowledge. The CAPTCHA developers had to understand the challenges the attackers faced and which distortions to add in order to make automating CAPTCHA recognition harder.

Because their developers misunderstood the principles the OCRs were based on, some CAPTCHAs were given distortions that were more of a hassle for regular users than for a machine.

OCRs for different types of CAPTCHAs were made using heuristics, and the most complicated part was segmenting the CAPTCHA into standalone symbols, which could then be easily recognized by a CNN (for example, LeNet-5); an SVM also showed good results, even on raw pixels.
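As an illustration of the segmentation step, here is a minimal sketch of one classic heuristic: splitting a binarized image wherever a column contains no ink. It is an assumed example, not the method of any particular CAPTCHA solver; the helper names `segment_columns` and `crop` and the tiny test image are hypothetical.

```python
from typing import List, Tuple

Image = List[List[int]]  # binarized image: 1 = ink, 0 = background


def segment_columns(image: Image) -> List[Tuple[int, int]]:
    """Return (start, end) column ranges, end exclusive, that contain ink."""
    if not image:
        return []
    width = len(image[0])
    # A column "has ink" if any row has a non-zero pixel in it.
    ink = [any(row[x] for row in image) for x in range(width)]
    segments: List[Tuple[int, int]] = []
    start = None
    for x, has_ink in enumerate(ink):
        if has_ink and start is None:
            start = x                      # a symbol begins here
        elif not has_ink and start is not None:
            segments.append((start, x))    # an empty column ends the symbol
            start = None
    if start is not None:
        segments.append((start, width))    # symbol touches the right edge
    return segments


def crop(image: Image, segment: Tuple[int, int]) -> Image:
    """Cut one symbol out of the image by its column range."""
    start, end = segment
    return [row[start:end] for row in image]


# Hypothetical 3x7 image with two separated symbols.
image = [
    [0, 1, 1, 0, 0, 1, 0],
    [0, 1, 0, 0, 0, 1, 0],
    [0, 1, 1, 0, 0, 1, 0],
]
segments = segment_columns(image)
glyphs = [crop(image, s) for s in segments]
```

The heuristic fails as soon as symbols touch or overlap, which is one reason segmentation was the hardest part of the pipeline: each cropped glyph is only useful to a classifier if it really contains a single symbol.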

In this article I’ll try to cover the whole history of CAPTCHA recognition, from heuristics to contemporary automated recognition systems. We’ll figure out whether CAPTCHA is still alive.

I’ll review the yandex.com CAPTCHA. The Russian version of the same CAPTCHA is more complex.

Multimodality has led the pack in machine learning in 2021. Neural networks are wolfing down images, text, speech and music all at the same time. OpenAI is, as usual, top dog, but as if in defiance of their name, they are in no hurry to share their models openly. At the beginning of the year, the company presented the DALL-E neural network, which generates 256x256 pixel images in answer to a written request. Descriptions of it can be found as articles on arXiv and examples on their blog.

As soon as DALL-E flushed out of the bushes, Chinese researchers got on its tail. Their open-source CogView neural network does the same trick of generating images from text. But what about here in Russia? One might say that “investigate, master, and train” is our engineering motto. Well, we caught the scent, and today we can say that we created from scratch a complete pipeline for generating images from descriptive textual input written in Russian.

In this article we present the ruDALL-E XL model, an open-source text-to-image transformer with 1.3 billion parameters, as well as the ruDALL-E XXL model, a text-to-image transformer with 12.0 billion parameters that is available in DataHub SberCloud, and several other satellite models.

We at Data Science Digest have always strived to ignite the fire of knowledge in the AI community. We’re proud to have helped thousands of people learn something new and to have given you the tools to push ahead. And we’ve not been standing still, either.

Please meet Data Phoenix, a Data Science Digest rebranded and risen anew from our own flame. Our mission is to help everyone interested in Data Science and AI/ML to expand the frontiers of knowledge. More news, more updates, and webinars(!) are coming. Stay tuned!

The new issue of the new Data Phoenix Digest is here! AI that helps write code, EU’s ban on biometric surveillance, genetic algorithms for NLP, multivariate probabilistic regression with NGBoosting, alias-free GAN, MLOps toys, and more…

If you’re more used to getting updates every day, subscribe to our Telegram channel or follow us on social media: Twitter, Facebook.

The new issue of DataScienceDigest is here!

The impact of NLP and the growing budgets to drive AI transformations. How Airbnb standardized metric computation at scale. Cross-Validation, MASA-SR, AgileGAN, EfficientNetV2, and more.

If you’re more used to getting updates every day, subscribe to our Telegram channel or follow us on social media: Twitter, LinkedIn, Facebook.

The new issue of DataScienceDigest is here!

Machine learning in healthcare, the top 10 TED talks on AI, fraud detection in Uber, DatasetGAN, Text-to-Image generation via transformers, and more…

New issue of DataScienceDigest is here! OpenAI is launching a $100 million startup fund, Albumentations 1.0 has been released, lessons on ML platforms, image cropping on Twitter, and more.

The new issue of Data Science Digest is here! Hop in to learn about the latest news, articles, tutorials, research papers, and event materials on DataScience, AI, ML, and BigData. All sections are prioritized for your convenience. Enjoy!

As we all know, the digital market is leaning heavily towards reliable, UX-driven processes, and app development has become quite complex, especially for mobile platforms.

For every organization, creating a product that meets its customers' needs comes with a plethora of challenges.

From the technical point of view, every business faces various challenges, including selecting the right platform for the app, choosing the right technology stack or framework, and creating an app that fulfills the needs and expectations of customers.

Similarly, there are more challenges that every business faces and needs to cope with while creating its dream product.

So, what to do?

Well, what if I say that the answer to all your queries and questions is Flutter app development with Artificial Intelligence (AI) integration?

Surprised? Wondering how?

Well, AI in Flutter app development is one of the best advancements in the software market. The concept of AI was first introduced in the 20th century, and it has since brought loads of innovations and advancements that we are still integrating into mobile app development.

But what are Artificial Intelligence and Flutter app development?

Hi All,

I’m pleased to invite you all to enroll in the Lviv Data Science Summer School, to delve into advanced methods and tools of Data Science and Machine Learning, including such domains as CV, NLP, Healthcare, Social Network Analysis, and Urban Data Science. The courses are practice-oriented and are geared towards undergraduates, Ph.D. students, and young professionals (intermediate level). The studies run July 19–30 and will be hosted online. Make sure to apply: spots are filling fast!

If you’re more used to getting updates every day, follow us on social media:

Telegram

Twitter

LinkedIn

Facebook

Regards,

Dmitry Spodarets.

So we have already played with different neural networks. Cursed image generation using GANs, deep texts from GPT-2 — we have seen it all.

This time I wanted to create a neural entity that would act like a beauty blogger. This meant it would have to post pictures like Instagram influencers do and generate the same kind of narcissistic texts.

Initially I planned to post the neural content on Instagram, but using the Facebook Graph API, which is needed to go beyond read-only access, was too painful for me. So I reverted to Telegram, which is one of my favorite social products overall.

The name of the entity/channel (Aida Enelpi) is a bad neural-oriented pun mostly generated by the bot itself.

Hi All,

I have some good news for you…

Data Science Digest is back! We’ve been “offline” for a while, but no worries: you’ll receive regular digest updates with top news and resources on AI/ML/DS every Wednesday, starting today.

If you’re more used to getting updates every day, follow us on social media:

Telegram - https://t.me/DataScienceDigest

Twitter - https://twitter.com/Data_Digest

LinkedIn - https://www.linkedin.com/company/data-science-digest/

Facebook - https://www.facebook.com/DataScienceDigest/

And finally, your feedback is very much appreciated. Feel free to share any ideas with me and the team, and we’ll do our best to make Data Science Digest a better place for all.

Regards,

Dmitry Spodarets.

Author: Sergey Lukyanchikov, Sales Engineer at InterSystems

What is Distributed Artificial Intelligence (DAI)?

Attempts to find a “bullet-proof” definition have not produced a result: it seems the term is slightly “ahead of its time”. Still, we can analyze the term itself semantically, deriving that distributed artificial intelligence is the same AI (see our effort to suggest an “applied” definition), though partitioned across several computers that are not clustered together (neither data-wise, nor via applications, nor by providing access to particular computers in principle). That is, ideally, distributed artificial intelligence should be arranged in such a way that none of the computers participating in that “distribution” has direct access to the data or applications of another computer: the only alternative becomes transmission of data samples and executable scripts via “transparent” messaging. Any deviation from that ideal leads to “partially distributed artificial intelligence”, an example being distributed data with a central application server, or its inverse. One way or the other, we obtain as a result a set of “federated” models (i.e., models each trained on their own data sources, or each trained by their own algorithms, or both at once).

Distributed AI scenarios “for the masses”

We will not be discussing edge computations, confidential data operators, scattered mobile searches, or similar scenarios that are fascinating yet not (at this moment) the most consciously and widely applied. We will be much “closer to life” if, for instance, we consider the following scenario (its detailed demo can and should be watched here): a company runs a production-level AI/ML solution, and the quality of its functioning is systematically checked by an external data scientist (i.e., an expert who is not an employee of the company). For a number of reasons, the company cannot grant the data scientist access to the solution, but it can send him a sample of records from a required table on a schedule or upon a particular event (for example, completion of a training session for one or several models by the solution). We also assume that the data scientist owns some version of the AI/ML mechanisms already integrated in the production-level solution that the company is running, and it is likely that they are being developed, improved, and adapted to the concrete use cases of that company by the data scientist himself. Deployment of those mechanisms into the running solution, monitoring of their functioning, and other lifecycle aspects are handled by a data engineer (a company employee).

Technology is as adaptable and compatible as mankind; it finds its way through problems and situations. 2020 was one such package of uncertain events that forced businesses to adapt to digital transformation, to the extent that many companies started to consider remote work culture a beneficial long-term model. Technological advancements like hyperautomation, AI security, and distributed cloud showed how any people-centric idea could rule the digital era. The past year clearly showed the boundless possibilities through which technology can survive or reinvent itself. With all those learnings, let's dive deep and focus on some of the top technology trends to watch out for in 2021.

There’s a lot of talk about machine learning nowadays. A big topic – but, for a lot of people, covered by this terrible layer of mystery. Like black magic – the chosen ones’ art, above the mere mortal for sure. One keeps hearing the words “numpy”, “pandas”, “scikit-learn” - and looking each up produces an equivalent of a three-tome work in documentation.

I’d like to shatter some of this mystery today. Let’s do some machine learning, find some patterns in our data – perhaps even make some predictions. With good old Python only – no 2-gigabyte library, and no arcane knowledge needed beforehand.

Interested? Come join us.
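As a taste of what "good old Python only" can look like, here is a minimal sketch of fitting a straight line by ordinary least squares and using it to predict a new value. It is an assumed illustration rather than the article's own code; the names `fit_line` and `predict` and the sample data are hypothetical.

```python
from typing import Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Fit y ~ slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Spread of x around its mean, and how x and y vary together.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model: Tuple[float, float], x: float) -> float:
    """Apply a fitted (slope, intercept) model to a new input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical data lying exactly on y = 2 * x + 1.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]
model = fit_line(xs, ys)
prediction = predict(model, 5.0)
```

The whole "model" here is two numbers, which is a useful reminder that finding a pattern and making a prediction does not require a large library to get started.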